This year, I-RIM is pleased to announce two thematic challenges, designed to evaluate the real-world impact of cutting-edge research developed by the community.


I-RIM 3D will feature two thrilling thematic competitions: human-aware navigation and human-robot collaborative manipulation. Dive into these challenges to push the boundaries of innovation!

Important dates

- Submission deadline: August 29, 2025 (previously August 8, 2025)

- Notification of acceptance: September 8, 2025 (previously August 18, 2025)

- Challenge dates: 17-19 October 2025

Program:

The Challenges will take place over three days, including team preparation, competitions, and the final evaluation and prize giving.

Friday 17 and Saturday 18 October

10:00 – 19:00 – Team preparation. Participants will have access to the competition area to set up, test, and fine-tune their systems.

Sunday 19 October

10:00 – 11:00 – Human-Aware Navigation Competition

11:00 – 13:00 – Human-Robot Manipulation Competition

13:00 – 13:30 – Award ceremony

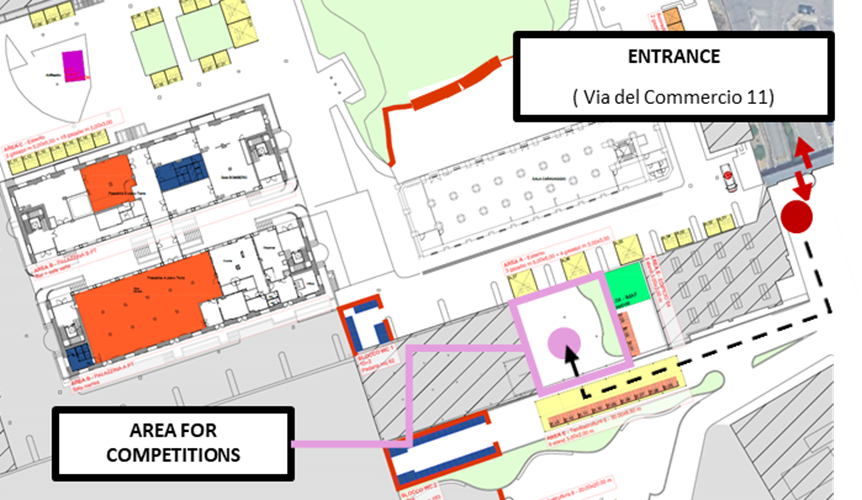

Venue

The Challenges will take place within the Maker Faire at the Gazometro Ostiense.

Entrance: Via del Commercio, 9-11 – 00154 Rome

Information on how to reach the venue can be found in the map below.

Industrial sponsor

Enrolment

A maximum of 5 teams will be accepted for each challenge. Each team may have up to 6 members. Interested teams can register by sending an email to the organizers indicating the chosen challenge, the names of the team members, and a 200-word description of the proposed solution.

Prize

The winning team of each competition will receive a cash prize of 500€.

Organizers and Contacts

1 Human-Aware Navigation Challenge

The Human-Aware Navigation Challenge aims at advancing the development of intelligent navigation systems for service and industrial robots operating in dynamic, human-populated environments.

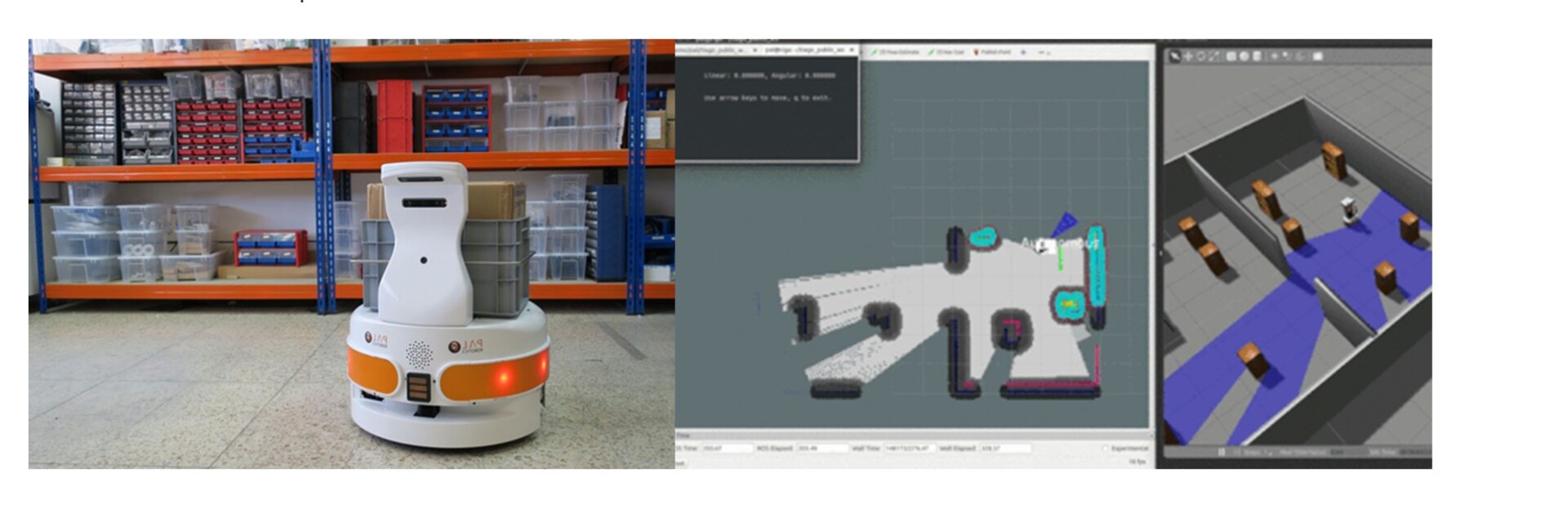

This challenge is supported by PAL Robotics, which will provide the Tiago mobile base equipped with navigation sensors such as an RGB-D camera, LiDAR, and ultrasonic sensors.

The competition focuses on the design of intelligent navigation strategies, based on advanced perception and motion planning algorithms, that are both human-aware (capable of recognizing and adapting to the presence and movement of people) and energy-efficient (minimizing trajectory length, energy expenditure, and travel duration). The final goal is to enable robots to move fluidly and safely toward assigned targets, even in dynamic and unpredictable environments.

Description

Participants are tasked with developing advanced perception and motion planning algorithms that allow the Tiago robot to navigate toward a sequence of target positions within an indoor environment populated by moving human agents. The challenge emphasizes the integration of two core modules:

- Advanced World Modeling: Using onboard sensors such as RGB-D cameras, LiDAR, and ultrasonic sensors, the robot must be capable of building and maintaining a detailed representation of the environment. This includes mapping walls, static obstacles, movable objects, and dynamically tracking people in motion. The system should be able to continuously update its internal model to reflect changes in the environment and anticipate possible interactions.

- Energy Efficient and Human-Aware Motion Planning: Given a specific goal, the robot shall be able to reach it, minimizing the energy cost of its path by choosing trajectories that are as short and smooth as possible, avoiding unnecessary accelerations or detours. Moreover, the robot shall be able to predict human presence and adapt its motion accordingly to ensure safety and comfort (e.g., avoiding abrupt stops or unnatural paths). The planner should anticipate human motion rather than merely reacting to it. While preserving safety and minimizing energy, the system must also optimize the time required to reach each target.
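As a rough illustration of how the two modules above might feed a single planning objective, the sketch below scores a candidate 2D trajectory by three terms: its length, a finite-difference acceleration term (a stand-in for energy and smoothness), and a penalty for entering the predicted comfort zone of a tracked person. It is not part of the baseline code. The function names, the constant-velocity human model, the `comfort_radius` value, and the weights are all assumptions made for illustration.

```python
import math


def path_length(points):
    """Total Euclidean length of a 2D polyline given as (x, y) tuples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def smoothness_cost(points, dt):
    """Sum of squared finite-difference accelerations along the trajectory."""
    cost = 0.0
    for p0, p1, p2 in zip(points, points[1:], points[2:]):
        ax = (p2[0] - 2 * p1[0] + p0[0]) / dt**2
        ay = (p2[1] - 2 * p1[1] + p0[1]) / dt**2
        cost += ax * ax + ay * ay
    return cost


def predict_human(position, velocity, t):
    """Constant-velocity prediction of a person's position after t seconds (assumed model)."""
    return (position[0] + velocity[0] * t, position[1] + velocity[1] * t)


def proximity_cost(points, dt, humans, comfort_radius=1.0):
    """Penalise waypoints that fall inside a person's predicted comfort zone.

    humans is a list of (position, velocity) pairs; waypoint k is assumed
    to be reached at time k * dt.
    """
    cost = 0.0
    for k, p in enumerate(points):
        for pos, vel in humans:
            d = math.dist(p, predict_human(pos, vel, k * dt))
            if d < comfort_radius:
                cost += (comfort_radius - d) ** 2
    return cost


def trajectory_cost(points, dt, humans, w_len=1.0, w_smooth=0.1, w_human=10.0):
    """Weighted sum of the three terms; the weights are hypothetical."""
    return (w_len * path_length(points)
            + w_smooth * smoothness_cost(points, dt)
            + w_human * proximity_cost(points, dt, humans))
```

A planner could evaluate several candidate trajectories with `trajectory_cost` and keep the cheapest one. Changing the weights shifts the balance between short paths and the distance kept from people.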

Participants will have access to a Gazebo-based simulation environment and a ROS1-compatible workspace. The developed solutions will later be deployed on a real Tiago robot during the final competition phase. Baseline code will be made available via GitHub, including simulation scenarios, human agent models, and initial planning frameworks.

Rules of the Game

- The competition environment will simulate an indoor scenario with static obstacles (e.g., walls and/or furniture) and dynamic human agents with predefined walking patterns.

- The robot will be tasked with reaching a series of target locations within a limited time frame.

- The robot must:

  - Avoid collisions with humans and obstacles.

  - Choose energy-efficient paths (minimizing both travel duration and length).

  - Reach targets in the shortest time possible while balancing energy and safety constraints.

The competition rules may be changed or extended. For updates, please consult the GitHub repository.

Software/Code Availability

The simulation environment, where each team can train and test its solutions, and the Tiago baseline code will be available to participants after challenge registration. Setup instructions will be provided with the simulation package. Please check the README in the repository for a description of the provided ROS packages.

The simulation environment will include:

- A Gazebo-based simulation with the Tiago base robot and workplace scenario.

- ROS Noetic compatible baseline packages.

- 3D models of the objects and environment.

- Instructions for setup and testing.

Each team is responsible for developing their own modules for:

- Walls and static objects mapping

- Human detection

- Motion planning and collision avoidance

Teams may use open-source baseline code to develop their own modules.

Scoring and Penalties

- Each team will have two attempts, each with a maximum duration (e.g., 5–10 minutes).

- The final score will be computed based on:

  - Number of targets reached.

  - Total time to reach all targets.

  - Energy consumption (estimated from trajectory length and motion profile).

- Penalties will be applied for:

  - Collisions or near-miss situations with humans or static obstacles.

  - Excessive detours or inefficient paths.

  - Unnatural or abrupt changes in velocity or direction.

Bonus points may be awarded for:

- Most innovative motion planning approach.

- Most reliable performance across trials.

The final score will be calculated as the sum of points for the number of targets reached minus any penalty points plus any bonus points. In case of a tie, the result of the second attempt will serve as a tiebreaker.
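The scoring rule above can be sketched as follows. This is only an illustration of the stated arithmetic (target points minus penalties plus bonuses, with the second attempt as tiebreaker). The function names and the data layout are assumptions. How the two attempts combine into the final score is not specified here, so the sketch takes the final score as given.

```python
def final_score(target_points, penalty_points=0.0, bonus_points=0.0):
    """Points for targets reached, minus penalties, plus bonuses."""
    return target_points - penalty_points + bonus_points


def rank_teams(results):
    """Order team names from best to worst.

    results maps a team name to a (final_score, second_attempt_score) pair
    (hypothetical layout). Tuples compare element by element, so the
    second-attempt score only matters when final scores are tied.
    """
    return sorted(results, key=lambda name: results[name], reverse=True)
```

For example, two teams with the same final score would be ordered by their second-attempt results, while a team with a lower final score ranks below both regardless of its second attempt.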


Q&A and Support

Bi-weekly Q&A sessions will be held (via Microsoft Teams) by the organizers to provide support, clarify rules, and update the baseline code.

For further information, contact:

- Dott. Clemente Lauretti (c.lauretti@unicampus.it)

- Dott. Andrea Pupa (andrea.pupa@unimore.it)

- Dott. Simone Leone (simone.leone@unical.it)

- Prof. Fabio Ruggiero (fabio.ruggiero@unina.it)

- Dott. Domenico Chiaradia (domenico.chiaradia@santannapisa.it)

2 Human-Robot Collaborative Manipulation Challenge

The Human-Robot Collaborative Manipulation Challenge focuses on the development of advanced perception and motion planning algorithms for collaborative manipulation in industrial settings.

The challenge is supported by PAL Robotics, which will provide the Tiago robot equipped with a 7-DoF robotic arm, a parallel gripper, and an RGB-D camera for environment perception.

This challenge targets the design of intelligent manipulation strategies that allow robots to operate safely, efficiently, and effectively in shared workspaces with human operators. The final goal is to enable the robot to perform manipulation tasks while coordinating with human coworkers, ensuring smooth cooperation, collision avoidance, and minimal disruption to human activities.

Description

Participants will develop algorithms that enable the Tiago robot to execute collaborative manipulation tasks in a workspace shared with a human operator. The robot will be asked to manipulate objects (e.g., pick and place components, hold items, or hand over tools) in coordination with human actions. The challenge emphasizes the following core aspects:

- Advanced World Modeling: Using an RGB-D camera, the robot must be capable of constructing and maintaining a detailed and up-to-date representation of the environment. This includes not only detecting and localizing the objects to manipulate, but also identifying and avoiding obstacles and dynamically tracking the human operator’s movements throughout task execution.

- Human-Robot Cooperation: Using advanced motion planning techniques and manipulation control strategies, the robot shall be capable of interacting with human operators safely and effectively. The challenge scenario will reflect realistic industrial operations—such as assembly and disassembly—requiring solutions that are robust, repeatable, and safe. Specifically, the robot will be required to retrieve tools from designated containers and hand them to the human operator while the latter performs assembly tasks. In the same context, the robot may also be asked to take tools from the human and return them to the appropriate containers, acting as a proactive assistant throughout the workflow.

Participants will be provided with a simulation environment built with Gazebo and ROS1, including mock-ups of industrial workbenches, shared tools, and human avatar models performing predefined actions. Final deployment will be carried out on the physical Tiago robot during the challenge event. A baseline repository including simulation scenes and example behaviors will be published on GitHub.

Rules of the Game

The competition will take place in a shared human-robot industrial-like workspace. The environment will include static structures, e.g., workbenches, tool holders, and a human operator performing predefined assembly or disassembly actions.

Each participating team will be required to deploy their solution on the Tiago robot and complete one or more collaborative manipulation tasks within a limited time window.

During the task execution, the robot must:

- Detect and localise tools and objects within the workspace.

- Dynamically track the human operator’s hand and adapt its behaviour accordingly, for handover activities.

- Plan and execute manipulation actions (such as picking tools from containers, handing them to the human, or receiving them and returning them to designated locations) without disrupting the human’s workflow, ensuring safety at all times and avoiding any potential collisions with the human or the environment.

- Control the robot gripper and arm to ensure safe handling of objects and minimise unnecessary motion.
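One possible way to turn hand tracking into a handover decision is sketched below. The robot starts a handover only when the tracked hand has been roughly stationary over a short window of samples and lies within the arm's reach. This is a minimal sketch under stated assumptions, not the baseline behavior: the function names, the `max_spread` and `max_reach` thresholds, and the use of the arm base as the reference point are all hypothetical.

```python
import math


def hand_is_steady(hand_positions, max_spread=0.03):
    """True if every 3D sample lies within max_spread metres of the window mean."""
    n = len(hand_positions)
    if n == 0:
        return False
    mean = tuple(sum(p[i] for p in hand_positions) / n for i in range(3))
    return all(math.dist(p, mean) <= max_spread for p in hand_positions)


def ready_for_handover(hand_positions, arm_base_position,
                       max_reach=0.8, max_spread=0.03):
    """True if the hand is steady and its latest position is within reach.

    hand_positions is a recent window of (x, y, z) samples from the
    tracker; arm_base_position is an (x, y, z) point in the same frame.
    """
    if not hand_is_steady(hand_positions, max_spread):
        return False
    return math.dist(hand_positions[-1], arm_base_position) <= max_reach
```

A check like this would typically run on every tracker update, so that the arm waits while the operator is still moving and moves in only once the hand is offered.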

Different task scenarios will be proposed, including tool handover. Each scenario will be structured with clear initial conditions and goals, and a set time budget.

The competition rules may be changed or extended. For updates, please consult the GitHub repository.

Software/Code Availability

The simulation environment, where each team can train and test its solutions, and the Tiago baseline code will be available to participants after challenge registration. Setup instructions will be provided with the simulation package. Please check the README in the repository for a description of the provided ROS packages.

The simulation environment will include:

- A Gazebo-based simulation with the Tiago robot and workplace scenario.

- ROS Noetic compatible baseline packages.

- 3D models of the objects and environment.

- Instructions for setup and testing.

Each team is responsible for developing their own modules for:

- Object detection and recognition.

- Human skeleton tracking

- Grasp and motion planning.

- Gripper control and object placement.

Teams may use open-source baseline code to develop their own modules.

Scoring and Penalties

Each team will have two official attempts, each with a maximum duration (e.g., 5–10 minutes depending on scenario complexity).

The final score will be based on:

- Number of completed manipulation actions (e.g., correct tool handed over or placed back).

- The difficulty of the objects handled (e.g., small, reflective, occluded items earn more points).

Penalties will be applied in case of:

- Collisions with the human operator or the environment.

- Missed or repeated attempts at tool handovers.

- Incorrect object selection.

- Damaged or dropped objects.

Bonus points may be awarded for:

- Most innovative cooperative approach.

- Most reliable performance across trials.

The final score will be calculated as the sum of points for completed manipulation actions minus any penalty points plus any bonus points. In case of a tie, the result of the second attempt will serve as a tiebreaker.
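To make the role of object difficulty concrete, the sketch below weights each completed manipulation action by the difficulty class of the object handled, then applies the penalty and bonus terms described above. The difficulty classes and point values are hypothetical placeholders, not the official scoring table.

```python
# Hypothetical points per completed action, by object difficulty class.
DIFFICULTY_POINTS = {
    "standard": 1.0,
    "small": 2.0,
    "reflective": 2.0,
    "occluded": 3.0,
}


def manipulation_score(completed_actions, penalty_points=0.0, bonus_points=0.0):
    """Score one attempt.

    completed_actions is a list of difficulty-class names, one entry per
    correctly completed action (tool handed over or placed back).
    """
    action_points = sum(DIFFICULTY_POINTS[d] for d in completed_actions)
    return action_points - penalty_points + bonus_points
```

With a table like this, handling one occluded tool counts as much as three standard ones, which rewards teams that invest in more robust perception.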

Q&A and Support

Bi-weekly Q&A sessions will be held (via Microsoft Teams) by the organizers to provide support, clarify rules, and update the baseline code.

For further information, contact:

- Dott. Clemente Lauretti (c.lauretti@unicampus.it)

- Dott. Andrea Pupa (andrea.pupa@unimore.it)

- Dott. Simone Leone (simone.leone@unical.it)

- Prof. Fabio Ruggiero (fabio.ruggiero@unina.it)

- Dott. Domenico Chiaradia (domenico.chiaradia@santannapisa.it)