Friday, October 20, 10:30-11:30

Opening keynote dedicated to the memory of Jean-Paul Laumond

Seth Hutchinson

Seth Hutchinson is currently Professor and KUKA Chair for Robotics in the School of Interactive Computing, and Executive Director of the Institute for Robotics and Intelligent Machines at the Georgia Institute of Technology.

He is also Emeritus Professor of Electrical and Computer Engineering at the University of Illinois at Urbana-Champaign.

Model-Based Methods in Today’s Data-Driven Robotics Landscape

Data-driven machine learning methods are enabling advances on a range of long-standing problems in robotics, including grasping, legged locomotion, perception, and many others. There are, however, robotics applications for which data-driven methods are less effective, and sometimes inappropriate. Data acquisition can be dangerous (to the surrounding workspace, to humans in the workspace, or to the robot itself), expensive, or excessively time-consuming. In such cases, generating data via simulation might seem a natural recourse, but simulation methods come with their own limitations: they require heavy computation and expose the so-called sim2real gap when complex dynamics are at play, and they struggle when nondeterministic effects are significant. Another alternative is to rely on a set of demonstrations, limiting the amount of required data through careful curation of the training examples; however, these methods fail when confronted with problems that were not represented in the training examples (so-called out-of-distribution problems), which precludes provable performance guarantees.

In this talk, I will describe some specific instances of the difficulties that confront data-driven methods, and present model-based solutions. Along the way, I will also discuss how judicious incorporation of data-driven machine learning tools can enhance performance of these methods. The examples I will describe come from recent work, including flapping flight by a bat-like robot, vision-based control of soft continuum robots, acrobatic maneuvering by quadruped robots, a cable-driven graffiti-painting robot, bipedal locomotion over granular media, and ensuring safe operation of mobile manipulators in HRI scenarios.

Friday, October 20 and Saturday, October 21: I-RIM and Maker Faire present:

“Visions on human-robot intelligence”

Friday 15:45-18:30

Sven Behnke

Prof. Dr. Sven Behnke has held the chair for Autonomous Intelligent Systems at the University of Bonn since 2008 and heads the Computer Science Institute VI – Intelligent Systems and Robotics. He graduated in 1997 from Martin-Luther-Universität Halle-Wittenberg (Dipl.-Inform.) and received his doctorate in computer science (Dr. rer. nat.) from Freie Universität Berlin in 2002. In his dissertation “Hierarchical Neural Networks for Image Interpretation” he extended feed-forward deep learning models to recurrent models for visual perception. In 2003 he did postdoctoral research on robust speech recognition at the International Computer Science Institute in Berkeley, CA. From 2004 to 2008, Professor Behnke led the Emmy Noether Junior Research Group “Humanoid Robots” at Albert-Ludwigs-Universität Freiburg. His research interests include cognitive robotics, computer vision, and machine learning. Prof. Behnke has received several Best Paper Awards, three Amazon Research Awards (2018-20), a Google Faculty Research Award (2019), and the Ralf Dahrendorf Prize of the BMBF for the European Research Area (2019). His team NimbRo has won numerous robot competitions (RoboCup Humanoid Soccer, RoboCup@Home, MBZIRC, ANA Avatar XPRIZE).

From Intuitive Immersive Telepresence Systems to Conscious Service Robots

Intuitive immersive telepresence systems enable transporting human presence to remote locations in real time. The participants in the ANA Avatar XPRIZE competition developed robotic systems that allow operators to see, hear, and interact with a remote environment in a way that feels as if they are truly there. In the talk, I will present the competition tasks and results. My team NimbRo won the $5M Grand Prize. I will detail our approaches for the operator station, the avatar robot, and the software. While telepresence enables a multitude of applications, such as telemedicine and remote assistance, other scenarios require autonomy. In the second part of my presentation, I argue that consciousness is needed for a robot to adapt quickly to novel tasks in open-ended domains and to be aware of its own limitations. I will present a research concept for developing conscious service robots that systematically generalize their knowledge and monitor themselves.

Margarita Chli

Margarita Chli is a Professor in Robotic Vision and director of the Vision for Robotics Lab at the University of Cyprus and ETH Zurich. Her work has contributed to the first vision-based autonomous flight of a small helicopter and to the demonstration of collaborative robotic perception for a small swarm of drones. Margarita has given invited keynotes at the World Economic Forum in Davos, TEDx, and ICRA, and she was featured in Robohub’s 2016 list of “25 women in Robotics you need to know about”. In 2023 she won an ERC Consolidator Grant, the most prestigious grant in Europe for blue-sky research, to grow her team at the University of Cyprus and research advanced robotic perception.

Vision-based robotic perception: are we there yet?

As vision plays a key role in how we interpret a situation, developing vision-based perception for robots promises to be a big step towards robotic intelligence, with a potentially tremendous impact on automating robot navigation. This talk will discuss our recent progress in this area at the Vision for Robotics Lab of ETH Zurich and the University of Cyprus (http://www.v4rl.com), as well as some of the biggest challenges we face.

Francesco Nori

Francesco Nori is Director of Robotics at Google DeepMind. He received his D.Eng. degree (highest honors) from the University of Padova (Italy) in 2002. In 2002 he was also a visiting student at the UCLA Vision Lab, University of California Los Angeles, under the supervision of Prof. Stefano Soatto. During this period he began research in computational vision and human motion tracking. In 2003 he started his Ph.D. under the supervision of Prof. Ruggero Frezza at the University of Padova, Italy. During his Ph.D., his research focused mainly on modular control, with special attention to biologically inspired control structures.

An overview of Research and Robotics at Google DeepMind

DeepMind is working on some of the world’s most complex and interesting research challenges, with the ultimate goal of solving artificial general intelligence (AGI). We want to develop an AGI capable of dealing with a wide variety of environments, and a truly general AGI needs to be able to act in the real world and to learn tasks on real robots. Robotics at DeepMind aims to endow robots with the ability to learn how to perform complex manipulation and locomotion tasks. This talk will give an introduction to DeepMind, with a specific focus on robotics, control, and reinforcement learning.

Massimo Sartori

Massimo Sartori is a Professor and Chair of Neuromechanical Engineering at the University of Twente, where he directs the Neuromechanical Modelling & Engineering Lab. His research focuses on understanding how human movement emerges from the interplay between the nervous and musculoskeletal systems. His goal is to translate this knowledge into the development of symbiotic wearable robots such as exoskeletons and bionic limbs. On these topics he has obtained blue-sky research funding (e.g., from the European Research Council), contributed to developing widely used open-source software (e.g., CEINMS, MyoSuite), launched spin-off initiatives from his Lab, and created patented technology with leading companies (e.g., OttoBock HealthCare). He obtained his PhD in Information Engineering (2011) from the University of Padova (Italy) and was a Visiting Scholar at the University of Western Australia (WA, Australia), Griffith University (QLD, Australia), and Stanford University (CA, USA). He conducted his main postdoc at the University of Göttingen (Germany), where he became Junior Research Group Leader in 2015. In 2017 he joined the University of Twente (The Netherlands) as a tenure-track scientist. Throughout his career he has served as guest editor for academic journals (e.g., IEEE RA-L, TBME) and co-organized leading congresses in the field (e.g., IEEE BioRob 2018). He currently chairs the IEEE RAS Technical Committee on BioRobotics. He is Associate Editor of IEEE TNSRE and IEEE RA-L and a member of scientific societies including the IEEE Robotics and Automation Society, the IEEE Engineering in Medicine and Biology Society, the IEEE International Consortium on Rehabilitation Robotics, and the European Society of Biomechanics.

Modelling the neuromuscular system for enhancing wearable robot-human interaction

Neuromuscular injuries leave millions of people disabled worldwide every year. Wearable robotics has the potential to restore a person’s movement capacity following injury. However, the impact of current technologies is hampered by our limited understanding of how wearable robots interact with the human neuromuscular system. Current movement-support robots such as exoskeletons interact with the human body without any feedback on how biological targets (e.g., tendons, muscles, motor neurons) react and adapt to robot-induced mechanical or electrical stimuli over time. This talk will outline current work in my Lab to create a new framework for ‘closing the loop’ between wearable technology and human biology. Our approach combines bio-electrical recordings, numerical modeling, and real-time closed-loop control to investigate how the human neuromuscular system responds over time to robotic interventions in vivo. This paradigm has the potential to revolutionize the development of effective and safe movement-support technologies for individuals with a wide range of neuromuscular injuries.

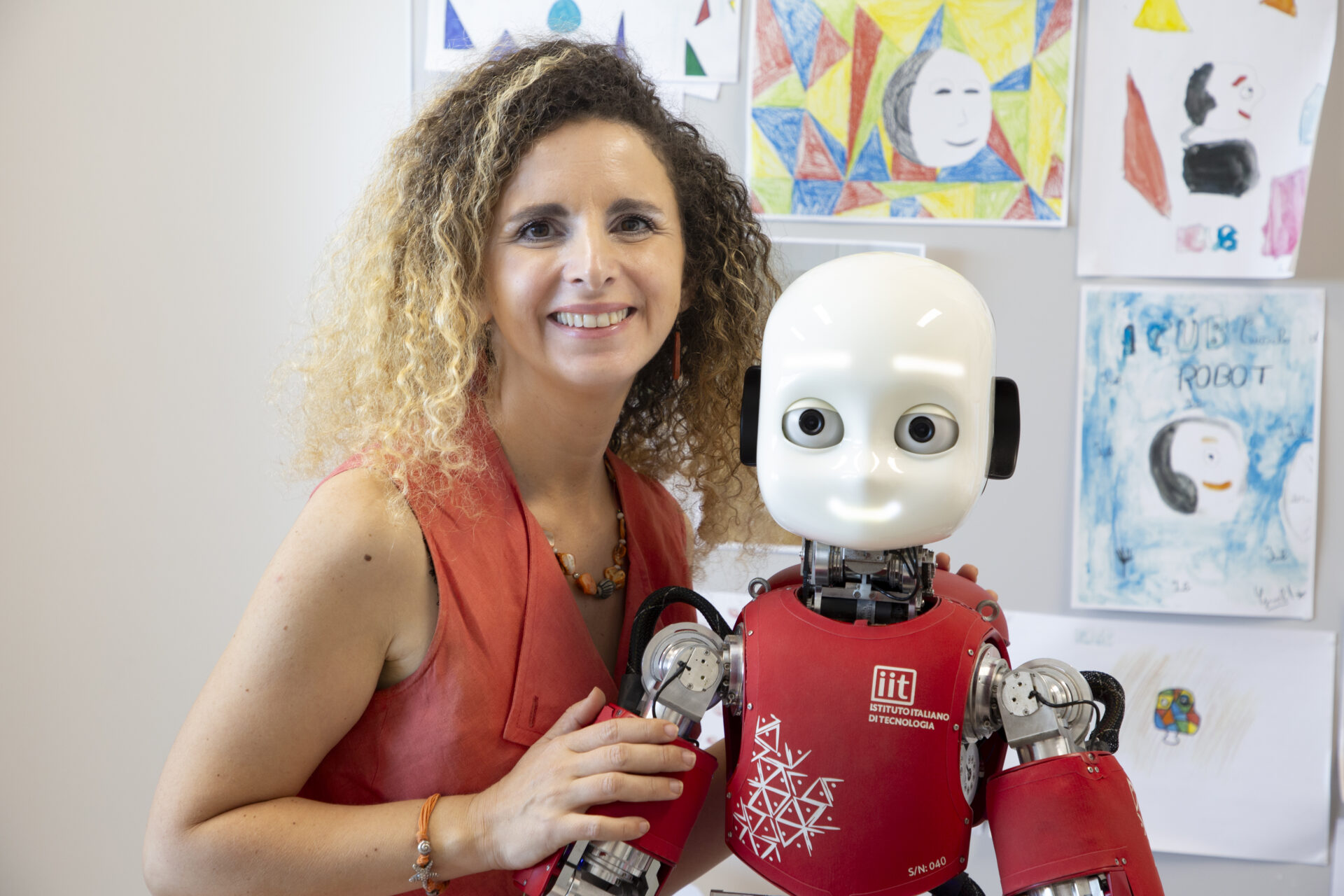

Alessandra Sciutti

Alessandra Sciutti is a Tenure-Track Researcher and head of the CONTACT (COgNiTive Architecture for Collaborative Technologies) Unit of the Italian Institute of Technology (IIT). She received her B.S. and M.S. degrees in Bioengineering and her Ph.D. in Humanoid Technologies from the University of Genova in 2010. After two research periods in the USA and Japan, in 2018 she was awarded the ERC Starting Grant wHiSPER (www.whisperproject.eu), focused on the investigation of joint perception between humans and robots. She has published more than 80 papers and abstracts in international journals and conferences and participated in the coordination of the CODEFROR European IRSES project (https://www.codefror.eu/). She is currently Associate Editor for several journals, including the International Journal of Social Robotics, the IEEE Transactions on Cognitive and Developmental Systems, and Cognitive Systems Research. The scientific aim of her research is to investigate the sensory and motor mechanisms underlying mutual understanding in human-human and human-robot interaction. For more details on her research, as well as the full list of publications, please see the CONTACT Unit website or her Google Scholar profile.

Improving HRI with Cognitive Robotics

This presentation explores the world of cognitive robotics, focusing on empowering robots to interact more intelligently with humans. The aim is to create robots that understand our needs, desires, and intentions while being transparent in their behavior. We discuss how cognitive robots can improve Human-Robot Interaction (HRI) through predictive capabilities, informed decision-making, and autonomous adaptation. Drawing inspiration from the natural progression of human cognitive skills from childhood onward, we propose a developmental approach for designing more human-centric machines that are better suited to interacting with us.

Saturday 10:30-11:30

Hiroshi Ishiguro

Hiroshi Ishiguro received a Ph.D. from Osaka University, Japan, in 1991. He is currently Professor in the Department of Systems Innovation at Osaka University, Visiting Director of Hiroshi Ishiguro Laboratories at the Advanced Telecommunications Research Institute (ATR), Project Manager of the MOONSHOT R&D Project, Thematic Project Producer of EXPO 2025 Osaka, Kansai, Japan, and CEO of AVITA, Inc. His research interests are interactive robotics, avatars, and android science. Geminoid is an avatar android that is a copy of himself. In 2011 he won the Osaka Cultural Award. In 2015 he received the Prize for Science and Technology from the Minister of Education, Culture, Sports, Science and Technology, and he was also awarded the Sheikh Mohammed Bin Rashid Al Maktoum Knowledge Award in Dubai. He received the Tateisi Award in 2020 and an honorary doctorate from Aarhus University in 2021.

Avatar and the future society

The speaker has been involved in research on tele-operated robots, that is, avatars, since around 2000. In particular, research on Geminoid, an android modeled on himself, is not only scientific research aimed at understanding the human feeling of presence, but also practical research that allows a person to transfer their existence to a remote place and work there. In this lecture, the speaker will introduce a series of research and development efforts on tele-operated robots such as Geminoid, and discuss what kind of society humans and robots will share in the future.